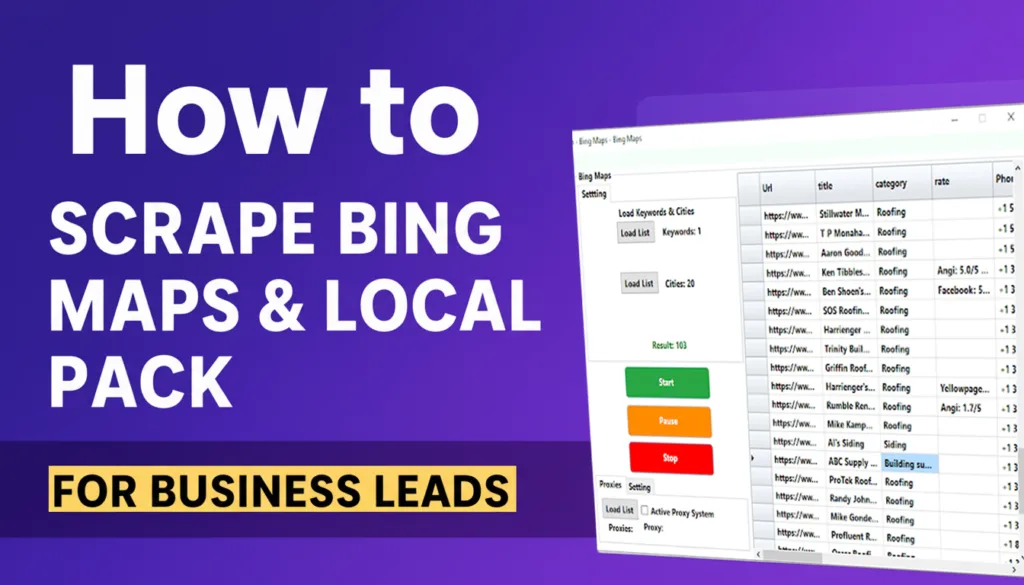

Architecting a Local Data Pipeline: Scraping Bing Maps and Local Pack

Introduction

In B2B lead generation and programmatic SEO, high-fidelity location data is the primary resource. Google Maps is the default target, but its high saturation and strong anti-scraping measures make Bing Maps and the Bing Local Pack effective alternatives for data mining.

This technical guide covers the architecture for sourcing structured data from Bing. It walks through defining the data schema, manual validation (prototyping), and automated extraction with proxy management and data normalization.

1. The Data Schema

Define the target data model before engineering the extractor. Bing Maps returns semi-structured HTML that must be parsed and converted into a rigid schema.

A typical JSON object representing one entity should contain:

    {
        "entityid": "UUIDv4",
        "businessname": "String",
        "category": "String (Array)",
        "contact": {
            "phoneraw": "String",
            "phonenormalized": "E.164 Format",
            "websiteurl": "URL String",
            "sociallinks": ["LinkedIn", "Twitter", "Facebook"]
        },
        "location": {
            "addressfull": "String",
            "street": "String",
            "city": "String",
            "stateprovince": "String",
            "zipcode": "String",
            "countryiso": "ISO 3166-1 alpha-2"
        },
        "metrics": {
            "rating": "Float (0.0 – 5.0)",
            "reviewcount": "Integer"
        },
        "metadata": {
            "source": "Bing Maps",
            "scrapedat": "ISO 8601 Timestamp"
        }
    }

Technical Note: A good deduplication key for your database (enforced with a unique constraint) is a hash of phonenormalized + domainname, where domainname is the domain taken from websiteurl.
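The note above can be sketched in a few lines of Python. This is a minimal illustration, not a fixed recipe: the helper names `domain_of` and `dedup_key`, the `|` separator, and the choice of SHA-256 here are assumptions made for the example.

```python
import hashlib
from urllib.parse import urlparse


def domain_of(website_url: str) -> str:
    """Return the lower-cased host of a URL without a leading 'www.'."""
    # urlparse only fills netloc when a scheme (or '//') is present.
    if "//" not in website_url:
        website_url = "//" + website_url
    host = urlparse(website_url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def dedup_key(phone_normalized: str, website_url: str) -> str:
    """SHA-256 hex digest of the normalized phone plus the website domain."""
    # The '|' separator is an assumption; it keeps the two fields from
    # running together before hashing.
    raw = f"{phone_normalized}|{domain_of(website_url)}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()
```

Because the domain is reduced to a bare host first, `https://www.example.com/about` and `example.com` produce the same key for the same phone number.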

2. Phase I: Manual Validation and DOM Inspection

Before scaling a scraper, validate manually to learn the DOM structure and confirm which data is available. This is the "MVP" stage.

The Bing Local Pack vs. Maps Interface

Local Pack (SERP): Appears in normal search results.

DOM Characteristic: Loads via initial server-side rendering, then expands via AJAX.

Use Case: Only top-ranking, high-intent results.

Inspection Workflow:

Open DevTools (F12) on a target listing.

Find the container classes and anchor tags that hold the title, phone, and website, and note any lazy-loaded elements (elements that are not rendered until you scroll or click).

3. Phase II: Automated Extraction Architecture

To collect more than a few dozen records, you need an automated pipeline. This section covers the design of a robust Bing Maps scraper.

A. Input Vector Definition

Define your search parameters by using a coordinate-based or keyword-based approach:

Seed Keywords: [plumber, SaaS, dentist]

Geo-Fencing: Use a radius, e.g. 10km, or a list of cities of interest.

Query Construction: Concatenate the parts into a precise query: {keyword} in {city}, {state}
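As a rough sketch, the query template above can be expanded over every keyword and location pair. The function names `build_queries` and `maps_url` are hypothetical, and the sample city is only an illustration. The URL pattern is the one used in the extraction pseudocode later in this guide.

```python
from itertools import product
from urllib.parse import quote_plus


def build_queries(keywords, locations):
    """Yield '{keyword} in {city}, {state}' for every keyword/location pair."""
    for keyword, (city, state) in product(keywords, locations):
        yield f"{keyword} in {city}, {state}"


def maps_url(query):
    """URL-encode a query and attach it to the Bing Maps search URL."""
    return f"https://www.bing.com/maps?q={quote_plus(query)}"


if __name__ == "__main__":
    seeds = ["plumber", "SaaS", "dentist"]
    cities = [("Austin", "TX")]  # sample location, chosen for illustration
    for q in build_queries(seeds, cities):
        print(maps_url(q))
```

Encoding the query matters because of the comma and spaces in the template; sending them raw can produce malformed URLs.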

B. Request Architecture and Anti-Bot Evasion

Bing implements rate limiting and IP fingerprinting. Your scraper solution should consider the following:

Proxy Rotation:

Do not use one fixed IP.

Use middleware to rotate residential proxies every N requests.

Logic: If the response code is 429 (Too Many Requests), rotate the proxy and retry with exponential backoff.

Header Management:

Rotate User-Agent strings to simulate different browser versions (Chrome, Firefox, Edge).

Ensure Referer headers simulate normal navigation.

Concurrency Control:

Limit concurrency (for example, to a single thread) to avoid triggering Denial of Service (DoS) protection.
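The rotation and retry logic above could look roughly like the following. It is a sketch under several assumptions: the proxy addresses and User-Agent strings are placeholders, and `fetch` is a hypothetical callable you supply (wrapping whatever HTTP client or browser you use) that returns a `(status, body)` tuple. For brevity it rotates only on a 429 rather than every N requests.

```python
import itertools
import random
import time

# Placeholder values; replace with your own proxy pool and current UA strings.
PROXIES = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:125.0) "
    "Gecko/20100101 Firefox/125.0",
]


def fetch_with_rotation(url, fetch, proxies=PROXIES, max_retries=4,
                        base_delay=1.0, sleep=time.sleep):
    """Call fetch(url, proxy, headers); on HTTP 429 rotate proxy and back off."""
    pool = itertools.cycle(proxies)
    proxy = next(pool)
    for attempt in range(max_retries + 1):
        headers = {
            "User-Agent": random.choice(USER_AGENTS),
            "Referer": "https://www.bing.com/",
        }
        status, body = fetch(url, proxy, headers)
        if status != 429:
            return status, body
        if attempt < max_retries:
            proxy = next(pool)  # rotate to the next proxy in the pool
            # Exponential backoff (1s, 2s, 4s, ...) plus a little jitter.
            sleep(base_delay * 2 ** attempt + random.uniform(0, 0.5))
    raise RuntimeError(f"still rate limited after {max_retries} retries")
```

Passing `fetch` and `sleep` in as parameters keeps the retry policy independent of the HTTP client, and makes it easy to exercise without touching the network.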

C. The Extraction Logic (Pseudocode):

    def scrapebinglocation(query):
        # Start a headless browser behind the next proxy in the pool
        driver = initializedriver(proxy=getnextproxy())
        try:
            driver.goto(f"https://www.bing.com/maps?q={query}")
            waitforselector(".entity-listing-container")
            results = []
            # Selectors are illustrative; confirm the real ones in DevTools
            listings = driver.findelements(".listing-card")
            for card in listings:
                data = {
                    "name": card.select(".business-name").text,
                    "phone": card.select(".phone-number").text,
                    "website": extracthref(card.select(".website-btn")),
                    "rating": parsefloat(card.select(".rating").text)
                }
                results.append(normalizedata(data))
            return results
        except TimeoutException:
            logerror("DOM failed to load")
            return None
        finally:
            driver.quit()  # always release the browser session
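The pseudocode calls a `normalizedata` helper without defining it. One possible shape for it is sketched below, mapping the scraped fields onto the schema from section 1. The phone handling is a deliberately naive assumption: it treats 10-digit numbers as North American and prefixes country code 1. A production pipeline would more likely use a dedicated phone-parsing library.

```python
import re
from datetime import datetime, timezone


def normalize_phone(raw, default_country_code="1"):
    """Best-effort E.164 formatting; returns None when the input looks invalid."""
    text = (raw or "").strip()
    digits = re.sub(r"\D", "", text)
    if text.startswith("+"):
        candidate = digits                          # already international
    elif len(digits) == 10:
        candidate = default_country_code + digits   # assumed national number
    elif len(digits) == 11 and digits.startswith(default_country_code):
        candidate = digits
    else:
        return None
    # E.164 allows at most 15 digits after the '+'.
    return f"+{candidate}" if 0 < len(candidate) <= 15 else None


def normalizedata(data):
    """Map one scraped card onto (part of) the target schema."""
    return {
        "businessname": (data.get("name") or "").strip(),
        "contact": {
            "phoneraw": data.get("phone"),
            "phonenormalized": normalize_phone(data.get("phone")),
            "websiteurl": data.get("website"),
        },
        "metrics": {"rating": data.get("rating")},
        "metadata": {
            "source": "Bing Maps",
            "scrapedat": datetime.now(timezone.utc).isoformat(),
        },
    }
```

Keeping both `phoneraw` and `phonenormalized` lets you re-run normalization later without scraping again.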

ETL: Transformation & Loading

Raw scraped data is rarely production-ready. You will need to add an ETL (Extract, Transform, Load) layer.

Deduplication: When scraping areas with overlapping coverage (e.g. New York vs. Manhattan), you will get duplicates.

Algorithm:

Create a hash (SHA-256) of phone + cleanwebsite.

Upsert (Update/Insert) into the database by this hash.

If it exists, update the last_seen date; otherwise, insert it.
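The three steps above can be sketched with Python's built-in `sqlite3` module. The table layout, column names, and function names are assumptions made for this example, and the `ON CONFLICT ... DO UPDATE` clause needs SQLite 3.24 or newer. Other databases offer equivalent upsert syntax.

```python
import hashlib
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS businesses (
    dedup_hash   TEXT PRIMARY KEY,
    businessname TEXT NOT NULL,
    first_seen   TEXT NOT NULL,
    last_seen    TEXT NOT NULL
)
"""

UPSERT = """
INSERT INTO businesses (dedup_hash, businessname, first_seen, last_seen)
VALUES (?, ?, ?, ?)
ON CONFLICT(dedup_hash) DO UPDATE SET last_seen = excluded.last_seen
"""


def record_hash(phone, cleanwebsite):
    """Step 1: SHA-256 of phone + cleanwebsite ('|' separator is an assumption)."""
    return hashlib.sha256(f"{phone}|{cleanwebsite}".encode("utf-8")).hexdigest()


def upsert_business(conn, dedup_hash, businessname, seen_at):
    """Steps 2 and 3: insert a new row, or only refresh last_seen if it exists."""
    conn.execute(UPSERT, (dedup_hash, businessname, seen_at, seen_at))
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    key = record_hash("+12125550100", "example.com")
    upsert_business(conn, key, "Acme Plumbing", "2024-01-01T00:00:00Z")
    upsert_business(conn, key, "Acme Plumbing", "2024-02-01T00:00:00Z")
    print(conn.execute("SELECT * FROM businesses").fetchall())
```

Using the hash as the primary key lets the database enforce uniqueness, so duplicate listings from overlapping searches collapse into one row.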

Data Stack Use Cases

After your pipeline is running, data can be used in the following ways:

Programmatic SEO: Generate landing pages from local service data (e.g. Top 10 Coffee Shops in Cairo).

Competitive Intelligence: Track review velocity (the change in review count over time) to identify new competitors that a web development agency should focus on.

Lead Scoring: Filter leads by their Digital Maturity (for example, businesses with high ratings but no website).
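The lead-scoring filter can be illustrated with a short function over records shaped like the schema in section 1. The thresholds (a rating of at least 4.5 and at least 20 reviews) and the function name are assumptions chosen for the example, not recommended values.

```python
def low_maturity_leads(records, min_rating=4.5, min_reviews=20):
    """Return records that are well rated but list no website."""
    leads = []
    for rec in records:
        metrics = rec.get("metrics", {})
        website = (rec.get("contact", {}).get("websiteurl") or "").strip()
        well_rated = (metrics.get("rating") or 0) >= min_rating
        established = (metrics.get("reviewcount") or 0) >= min_reviews
        if not website and well_rated and established:
            leads.append(rec)
    return leads
```

Requiring a minimum review count alongside the rating avoids flagging businesses whose high score rests on only one or two reviews.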

Compliance and Governance

Technical capability does not confer legal immunity. Best practices in web governance:

Respect robots.txt: Check whether Bing's robots.txt sets rules for your crawler, such as disallowed paths or crawl delays.

PII Sanitization: Make sure you are scraping business data, not personal data about individuals, to stay in line with GDPR/CCPA.

Request Throttling: Do not hammer the target server. Ethical scraping implies a pause (sleep) between requests.
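A small sketch of the robots.txt and throttling checks, using Python's standard `urllib.robotparser`. The robots.txt content below is a made-up sample for illustration and is not Bing's actual file. In practice you would load the live file with `set_url()` and `read()`. The user agent name `my-bot` and the 2-second fallback delay are also assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only to demonstrate the parser.
SAMPLE_ROBOTS = """\
User-agent: *
Crawl-delay: 5
Disallow: /private/
"""


def load_rules(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def polite_delay(parser, user_agent, default_delay=2.0):
    """Seconds to sleep between requests: the Crawl-delay if set, else a default."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default_delay


if __name__ == "__main__":
    rules = load_rules(SAMPLE_ROBOTS)
    print(rules.can_fetch("my-bot", "https://example.com/private/page"))
    print(polite_delay(rules, "my-bot"))
```

Calling `time.sleep(polite_delay(...))` between requests then gives you throttling that follows the site's stated preference when one exists.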

Conclusion

A production Bing Maps scraper requires moving from simple script execution to a managed data pipeline. With proxy management, sound DOM parsing, and rigorous data normalization, you turn unstructured web data into a queryable asset that is valuable for business intelligence.
