For the Canadian real estate industry, Realtor.ca is one of the richest sources of agent and brokerage information. A Realtor.ca scraper can help you collect this data at scale, in a tidy, consistent form that is ready for outreach or analysis.

This tutorial walks you through the exact workflow (open the site, select filters, copy the URL, add it to your scraper, start, export) and shows how to make the resulting output human-friendly and useful.

Why scrape Realtor.ca?

- High-value contacts: Find agents and brokerage offices along with their roles, reviews, and social links.

- Niche prospecting: Build accurate lists by city, province, specialty, or brokerage.

- Flexible exports: Export your results as XLSX, CSV, or JSON for your CRM, spreadsheets, or pipelines.

- Time savings: Replace manual copy-pasting with a run that finishes in minutes.

Note: Always review the site's Terms of Use and applicable laws before scraping. Handle the data responsibly and honor do-not-contact requests.

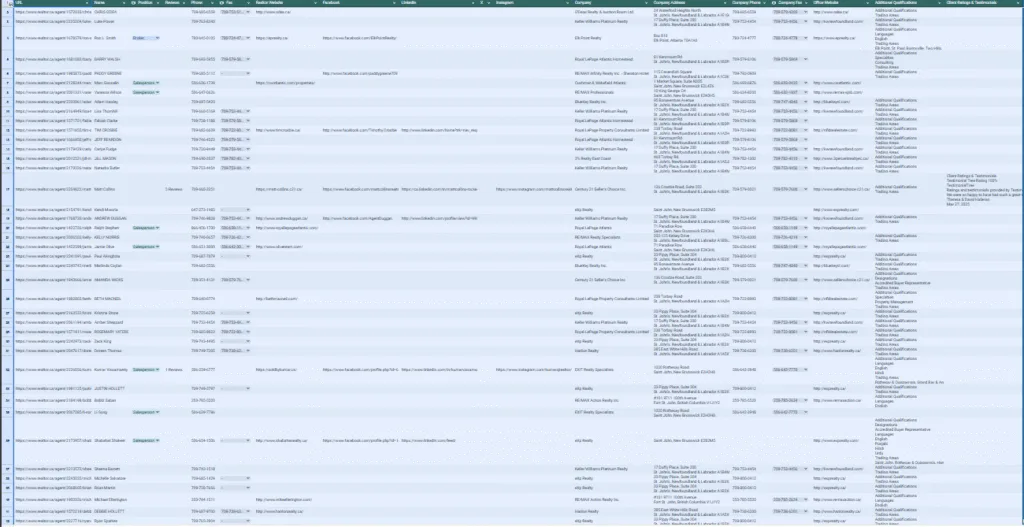

What data you can capture (fields)

A well-configured Realtor.ca scraper can export the following fields:

- URL

- Name

- Position (e.g., Sales Representative, Broker)

- Reviews (aggregate count or rating where available)

- Phone

- Fax

- Realtor Website (agent’s personal site)

- X (formerly Twitter)

- Company (brokerage)

- Company Address

- Company Phone

- Company Fax

- Office Website

- Additional Qualifications (designations, languages, specialties)

- Client Review & Testimonials

Note: Not every profile has every field. Your scraper should leave blanks where data isn’t present so your export stays clean and consistent.
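
As a minimal sketch of that "leave blanks" rule, the snippet below maps a scraped record onto a fixed column order and fills anything missing with an empty string. The column names are taken from the field list above; the `to_row` helper and the sample record are hypothetical and not part of any particular scraper.

```python
# Column order taken from the field list above.
FIELDS = [
    "URL", "Name", "Position", "Reviews", "Phone", "Fax",
    "Realtor Website", "X", "Company", "Company Address",
    "Company Phone", "Company Fax", "Office Website",
    "Additional Qualifications", "Client Review & Testimonials",
]


def to_row(record: dict) -> dict:
    """Return a dict with every expected field; missing or None values become ""."""
    row = {}
    for field in FIELDS:
        value = record.get(field)
        row[field] = "" if value is None else str(value).strip()
    return row


# Hypothetical scraped record with most fields absent.
partial = {"Name": "Jane Doe", "Phone": None, "Company": " Example Realty "}
row = to_row(partial)
```

Every row produced this way has the same columns in the same order, so spreadsheets and CRM imports line up even when a profile is sparse.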

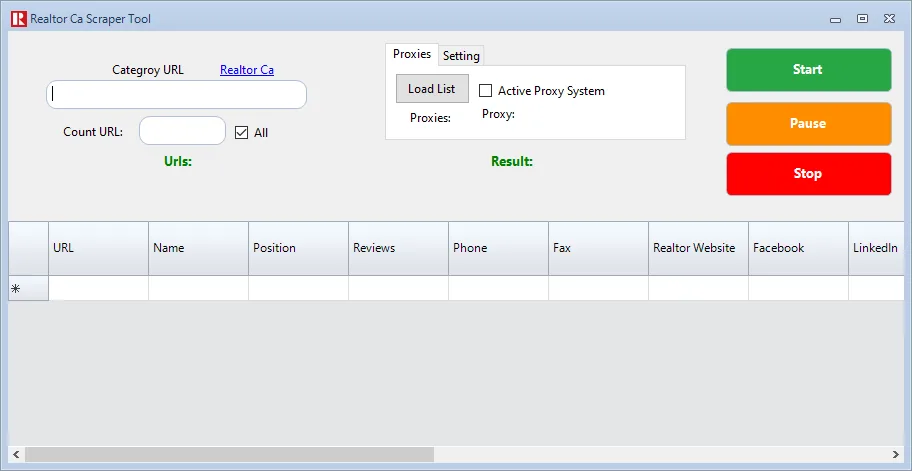

How to use a Realtor.ca scraper, step by step

Open Realtor.ca: Visit the site and go to the agent or brokerage search area. Search filters generally include location and, in some cases, specialty.

Select category and location: For category, choose your target group: Agents, Brokers, or Offices (however the site lets you filter). For location, choose a city, province, or larger area (e.g., Toronto, ON or British Columbia). Apply any additional filters (language, specialty, etc.) where available.

Copy the results page link: Once the results have loaded, copy the complete URL from your browser's address bar. That URL encodes your filters (category + location + any other parameters), and it is what you should feed to your scraper.

Add the link to your Realtor.ca scraper: Paste the URL into the scraper. To scale, add several links (e.g., Vancouver agents, Calgary agents, Montreal agents) so that you can run several markets at once.

Start scraping: Click Start. The scraper will go through all the results, open profile pages where necessary, and scrape the fields listed above. Make sure your tool handles pagination (Next pages) so that you do not miss listings.

Export your data: Once the run finishes, export your dataset to the format that best fits your workflow:

- XLSX for quick team review in spreadsheets.

- CSV for CRM imports and email tools.

- JSON for developers and downstream automation.
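
If your tool only gives you one format, converting between CSV and JSON yourself is straightforward. The sketch below uses only the Python standard library; the function names and the idea that your rows are a list of dicts are assumptions for illustration. XLSX is left out because it needs a third-party library.

```python
import csv
import json


def export_csv(rows, path, fields):
    """Write rows (a list of dicts) to CSV with a fixed header; missing keys become empty cells."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fields, restval="", extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)


def export_json(rows, path):
    """Write rows to a JSON array, keeping accented characters readable."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(rows, f, ensure_ascii=False, indent=2)
```

Passing an explicit `fields` list keeps the column order stable between runs, which matters when a CRM import is mapped by position.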

Make the output human-friendly (and ready for action)

- Use clear headers: Keep the exact field names above so teammates instantly understand each column.

- Normalize phones: Make phone numbers dialable and CRM-readable (e.g., +1-XXX-XXX-XXXX).

- De-duplicate records: Some agents may appear in multiple filtered views. De-duplicate by Name + Company + Phone or by profile URL.

- Label your segment: Add two more columns, Target Category and Target Location, so that you can slice lists later.

- Validate contact points: Run light validation on emails/phones to reduce bounces and bad numbers before outreach.
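
Two of the clean-up steps above, phone normalization and segment labels, can be sketched in a few lines of Python. The helper names are hypothetical, and the phone logic assumes plain 10-digit North American numbers with an optional leading 1; numbers with extensions or other shapes come back empty so you can review them by hand.

```python
import re


def normalize_phone(raw):
    """Format a North American number as +1-XXX-XXX-XXXX; return "" if it does not fit."""
    digits = re.sub(r"\D", "", raw or "")
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the country code
    if len(digits) != 10:
        return ""  # not a plain 10-digit number; leave for manual review
    return f"+1-{digits[:3]}-{digits[3:6]}-{digits[6:]}"


def label(row, category, location):
    """Return a copy of the row with the two segment columns added."""
    return {**row, "Target Category": category, "Target Location": location}


# Hypothetical row from a "Toronto agents" run.
row = label({"Name": "Jane Doe", "Phone": normalize_phone("(416) 555-0100")},
            "Agents", "Toronto, ON")
```

Returning an empty string instead of a guess keeps bad numbers out of your dialer, in line with leaving blanks where data is missing.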

AI Niche Targeting (optional power-up)

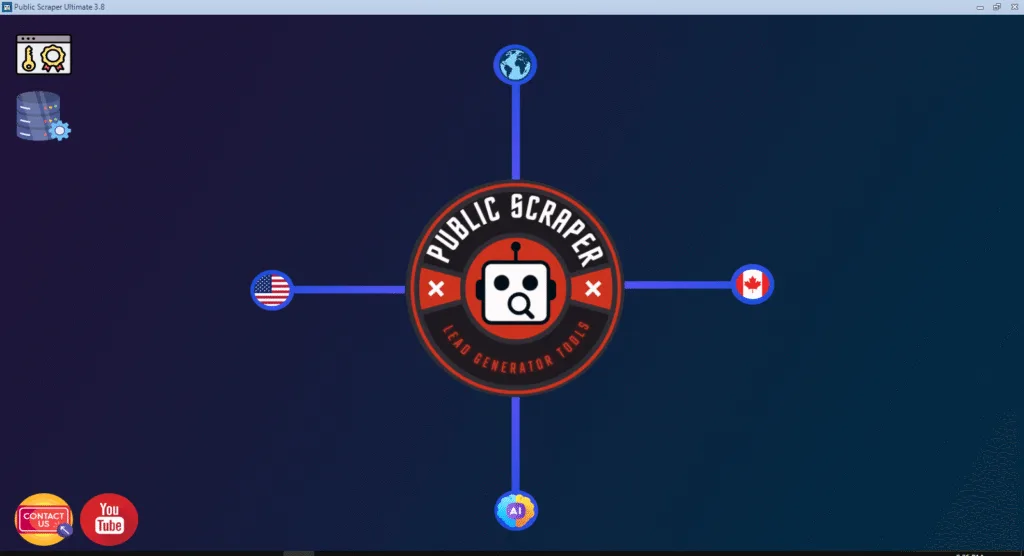

Want smarter prospecting? Add AI Niche Targeting to your workflow. Simply describe what you sell (e.g., “CRM for solo agents,” “media packages for luxury listings”) and let the AI recommend the agent types, specialties, and locations most likely to be interested in your offer. This helps you focus on high-fit, high-intent segments (e.g., luxury-designated agents in Toronto and Vancouver) so that your scraped list converts better and your outreach feels more relevant.

Why use Public Scraper Ultimate?

Public Scraper Ultimate is the better fit if you need more than a one-off list. In addition to Realtor.ca, it includes multiple directory scrapers and automation options: you can queue numerous category/location URLs, run them in bulk, and export to XLSX, CSV, or JSON in one click. From lead generation to market mapping, Public Scraper Ultimate can save you hours each week and provide clean, structured data your sales and marketing teams can rely on.

Example Workflows

- Agent recruiting: Scrape agent names, positions, and socials filtered by city/province. Prioritize Additional Qualifications to match your ideal profile.

- Vendor outreach (photography, staging, CRMs): Use AI Niche Targeting to narrow down to the agents most likely to be interested in the service (e.g., agents with a high listing volume or a luxury specialization).

- Competitive research: Capture Company, Company Address, and Office Website to map brokerage presence and analyze area coverage.

- Reputation monitoring: Pull Reviews and client testimonials into a sentiment dashboard to monitor the brand.

Best Practices / Compliance Hints

- Test small: Run a few pages in one city to check field mapping, then scale up.

- Respect rate limits: Use polite delays and concurrency controls to prevent throttling.

- Handle missing fields gracefully: Avoid inserting placeholders that can make CSV imports fail; leave empty cells empty instead.

- Keep a changelog: As you improve your scraping setup (e.g., better social detection), record the date and the change so that you can track data quality over time.

- Compliance audit: Read the site's Terms and relevant laws. Use scraped contact data ethically and respect opt-out requests.
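
If you script any part of the run yourself, polite delays can be as simple as the sketch below. It processes URLs one at a time (a concurrency of one) and pauses a randomized interval between requests. The `crawl_politely` name, the default delay values, and the `fetch` callable you pass in are all assumptions for illustration; tune the delays to what the site tolerates.

```python
import random
import time


def crawl_politely(urls, fetch, min_delay=2.0, jitter=1.0, sleep=time.sleep):
    """Call fetch(url) for each URL in order, pausing between requests.

    The pause is min_delay plus a random amount up to `jitter` seconds.
    `sleep` is injectable so the function can be tested without waiting.
    """
    results = []
    for i, url in enumerate(urls):
        if i > 0:  # no need to wait before the first request
            sleep(min_delay + random.uniform(0, jitter))
        results.append(fetch(url))
    return results
```

To follow the "test small" advice, pass only the first few URLs (for example `urls[:3]`) and check the field mapping before queuing the rest.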

Troubleshooting Quick Wins

- Partial results? Make sure that the scraper is configured to follow profile pages (not only skim the results grid).

- Duplicates? Normalize names (strip spaces, normalize case) and de-duplicate by Name + Phone.

- Messy phones/faxes? Run a basic post-processing script to standardize North American numbers.

- Empty social links? Not every agent lists socials. Consider enriching later from the agent's Realtor Website, if one is listed.
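
The duplicate fix above can be sketched as follows: build a key from the normalized name and the last ten digits of the phone, then keep the first row seen for each key. The function names are hypothetical, and comparing on the last ten digits assumes North American numbers.

```python
import re


def dedupe_key(row):
    """Build a comparison key: collapsed, case-insensitive name plus last 10 phone digits."""
    name = " ".join((row.get("Name") or "").split()).casefold()
    phone = re.sub(r"\D", "", row.get("Phone") or "")[-10:]
    return (name, phone)


def dedupe(rows):
    """Keep the first row for each Name + Phone key, preserving input order."""
    seen = set()
    unique = []
    for row in rows:
        key = dedupe_key(row)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique
```

Two agents who share a name but have different phone numbers stay separate, which is why the key combines both fields rather than using the name alone.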