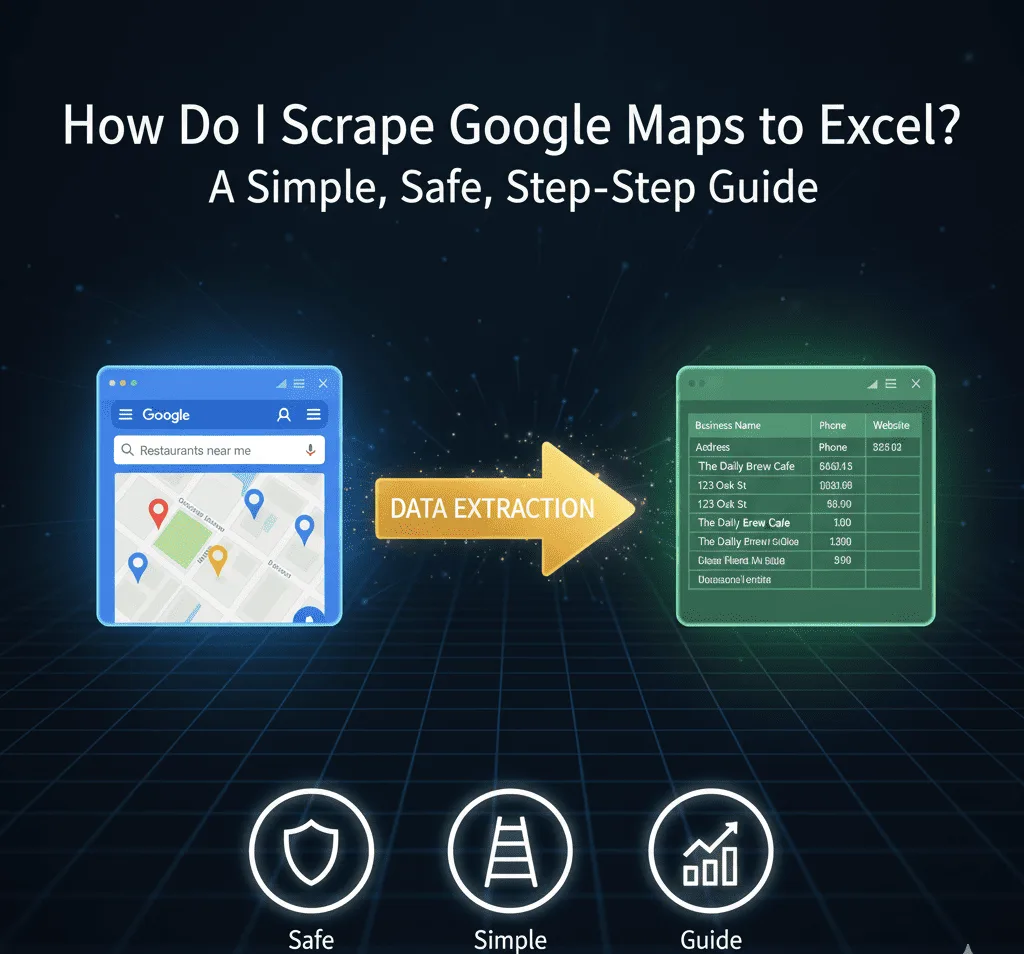

Local SEO, from Map to Spreadsheet: A Technical Guide to Scraping Google Maps Data

In sales, local SEO, and market research, data is only as valuable as it is organized. A Google Maps search result is visual and interactive, but it is not computable: you cannot filter the map to Review Count > 50 or sort it by Missing Website. A scraper can turn the map into data you can query that way, but before you write a single line of it, you need to define your target schema.

Phase 1: Schema Design and Data Modeling

Before you write a scraper or scraper module, define your target schema: the structure into which you will convert unstructured web data, such as an Excel spreadsheet. Scraping every available field usually produces bloated files that can crash Excel. Instead, open Excel and map the Google Maps elements to specific columns in your output file. List as columns only the attributes you plan to query later:

- Primary Keys: Business Name, Place ID (where available).

- Contact Protocols: Phone Number (formatted), Website URL.

- Quality Metrics: Star Rating, Total Review Count.

- Geospatial Data: Street Address, City, State / Province, Zip/Postal Code, Country.

- Meta: Google Maps Permalink (for manual verification).
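To make the schema concrete, here is a minimal Python sketch of one possible row layout. The class name `BusinessRecord`, the snake_case field names, and the `well_reviewed` helper are hypothetical illustrations, not the export format of any particular scraper. Once each attribute has its own column, a filter like Review Count > 50 becomes a one-line query.

```python
from dataclasses import asdict, dataclass, fields
from typing import List, Optional


@dataclass
class BusinessRecord:
    """One spreadsheet row. Field names are illustrative, not a tool's export format."""
    # Primary keys
    business_name: str
    place_id: Optional[str] = None      # not every listing exposes one
    # Contact protocols
    phone: Optional[str] = None
    website: Optional[str] = None
    # Quality metrics
    rating: Optional[float] = None
    review_count: int = 0
    # Geospatial data, one component per column
    street: Optional[str] = None
    city: Optional[str] = None
    state: Optional[str] = None
    postal_code: Optional[str] = None
    country: Optional[str] = None
    # Meta: permalink kept for manual verification
    maps_url: Optional[str] = None


# Spreadsheet header row, in schema order.
COLUMNS: List[str] = [f.name for f in fields(BusinessRecord)]


def well_reviewed(records: List[BusinessRecord], min_reviews: int = 50) -> List[BusinessRecord]:
    """The kind of query the map itself cannot answer: Review Count > 50."""
    return [r for r in records if r.review_count > min_reviews]
```

`asdict(record)` turns a record into a dict keyed by `COLUMNS`, which is the shape `csv.DictWriter` expects when writing the spreadsheet.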

Pro Tip: Always split the address into its components. If 123 Main St, Austin, TX is stored in a single cell, you cannot easily filter by City later. Dedicated scrapers usually do this parsing automatically.
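As an illustration of that parsing, the sketch below splits a simple US-style address into separate columns. It assumes the "street, city, STATE [ZIP]" pattern with an optional trailing "USA"; the function name `split_us_address` is hypothetical, and real-world addresses (international formats, unusual suite notation) need a dedicated address parser.

```python
import re
from typing import Dict, Optional

# Last address component: two-letter state code with an optional 5- or 9-digit ZIP,
# e.g. "TX" or "TX 78701". Assumes US-style addresses only.
STATE_ZIP = re.compile(r"^(?P<state>[A-Z]{2})(?:\s+(?P<zip>\d{5}(?:-\d{4})?))?$")


def split_us_address(address: str) -> Dict[str, Optional[str]]:
    """Split 'street, city, STATE [ZIP][, USA]' into separate columns.

    Returns all-None fields when the pattern does not match, so the row
    can be flagged for manual review instead of being split incorrectly.
    """
    result: Dict[str, Optional[str]] = {
        "street": None, "city": None, "state": None, "postal_code": None,
    }
    parts = [p.strip() for p in address.split(",") if p.strip()]
    if parts and parts[-1] in ("USA", "United States"):
        parts = parts[:-1]  # drop a trailing country name
    if len(parts) < 3:
        return result
    match = STATE_ZIP.match(parts[-1])
    if match is None:
        return result
    result["street"] = ", ".join(parts[:-2])  # keeps fragments like "Suite 4"
    result["city"] = parts[-2]
    result["state"] = match.group("state")
    result["postal_code"] = match.group("zip")
    return result
```

For example, `split_us_address("123 Main St, Austin, TX")` yields street "123 Main St", city "Austin", state "TX", and no postal code.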

Phase 2: The Acquisition Method

There are two major methods of data acquisition:

1. Manual Transcription (The Brute Force Method)

You click a pin, copy the text, and paste it into Excel by hand.

- Complexity: Linear, O(n). The time required grows directly with every record you add.

- Drawbacks: Copy-paste errors are likely, and the method does not scale beyond roughly 50 records.

2. Automated Extraction (The “Headless” Method)

Software crawls the map, parses the underlying HTML/JSON, and catalogs the data automatically. This is where tools such as Public Scraper Ultimate act as a force multiplier.
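To show what "cataloging" means in practice, here is a sketch that flattens a JSON response into spreadsheet rows. The payload shape and its key names (`results`, `name`, `phone`, `rating`, `reviews`, `website`) are invented for illustration; they do not describe Google's actual responses or the output of Public Scraper Ultimate. The function name `catalog` is likewise hypothetical.

```python
import json
from typing import Any, Dict, List

# Hypothetical payload for illustration only; real responses differ.
SAMPLE_PAYLOAD = """
{"results": [
  {"name": "Example Pizza", "phone": "+1 555-123-4567", "rating": 4.6,
   "reviews": 212, "website": "https://example.com/"},
  {"name": "Another Slice", "rating": 4.1, "reviews": 38}
]}
"""


def catalog(payload: str) -> List[Dict[str, Any]]:
    """Map each result object onto the spreadsheet columns from Phase 1."""
    rows: List[Dict[str, Any]] = []
    for item in json.loads(payload).get("results", []):
        rows.append({
            "business_name": item.get("name"),
            "phone": item.get("phone"),        # absent keys become None (empty cells)
            "website": item.get("website"),
            "rating": item.get("rating"),
            "review_count": item.get("reviews", 0),
        })
    return rows
```

Missing fields become empty cells rather than errors, which is why the second sample business still produces a row.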

Phase 3: The Execution Workflow

Using the Google Maps Scraper as our reference implementation, the following steps produce a clean dataset.

Step 1: Query Construction and Geotargeting

The quality of your output depends on how specific your input is.

- Keyword Logic: Search algorithms are literal. A search for “Roofing” may return suppliers, while “Roof Repair” returns contractors. Test several keywords to find the ones that match your purpose.

- Geo-Fencing: You can target only the locations relevant to your Phase 1 schema, or supply a list of cities to scrape in bulk.

- Tech Tip: When scraping a very large area (such as an entire state), split the job into major cities to avoid timeouts while still covering the whole region. Likewise, do not request the Opening Hours array unless you need it; fetching it slows down extraction for every individual business.
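The keyword and geo-fencing advice above can be expressed as a job list: every keyword crossed with every location, with a state-wide search pre-split into major cities. The city list, the keywords, the "keyword in location" query wording, and the helper names `build_jobs` and `to_query` are assumptions for this sketch, not inputs required by any tool.

```python
from itertools import product
from typing import List, Tuple

# Hypothetical inputs: a state-wide job split into major cities.
KEYWORDS = ["Roof Repair", "Roofing Contractor"]
TEXAS_CITIES = ["Austin, TX", "Dallas, TX", "Houston, TX", "San Antonio, TX"]


def build_jobs(keywords: List[str], locations: List[str]) -> List[Tuple[str, str]]:
    """Return one (keyword, location) job per combination, without repeats."""
    unique_keywords = list(dict.fromkeys(k.strip() for k in keywords))  # keeps input order
    unique_locations = list(dict.fromkeys(loc.strip() for loc in locations))
    return list(product(unique_keywords, unique_locations))


def to_query(job: Tuple[str, str]) -> str:
    """Search text for one job, e.g. 'Roof Repair in Austin, TX'."""
    keyword, location = job
    return f"{keyword} in {location}"
```

Two keywords across four cities give eight small jobs, each far less likely to time out than one state-wide search.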

Step 3: Rate Limiting and Concurrency

This is the most important technical setting.

- Delays: Web scraping must be polite. Add a delay of 2 to 5 seconds between requests. This mimics human browsing and keeps your IP address from being flagged as a source of aggressive traffic.

- Duplication Logic: Enable duplicate checking. If you search “Pizza Brooklyn” and then “Italian Food Brooklyn”, the results will overlap; with this setting on, the tool automatically skips any business it has already captured.
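Both settings can be sketched as one crawl loop: a random pause of 2 to 5 seconds between queries, and a `seen` set that drops businesses already captured by an earlier, overlapping search. `fetch` stands in for whatever call your scraper makes per keyword/location pair and is hypothetical here, as are `dedup_key` and `polite_crawl`. The dedup key prefers the Place ID and falls back to name plus street address when no ID is available.

```python
import random
import time
from typing import Any, Callable, Dict, Iterable, List, Set, Tuple

Row = Dict[str, Any]


def dedup_key(row: Row) -> str:
    """Prefer the Place ID; otherwise fall back to name + street, case-insensitive."""
    if row.get("place_id"):
        return "id:" + str(row["place_id"])
    name = (row.get("business_name") or "").strip().lower()
    street = (row.get("street") or "").strip().lower()
    return f"na:{name}|{street}"


def polite_crawl(
    jobs: Iterable[Tuple[str, str]],
    fetch: Callable[[str, str], List[Row]],  # hypothetical per-query scraper call
    min_delay: float = 2.0,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Row]:
    """Run each (keyword, location) job with a random pause and skip repeat businesses."""
    seen: Set[str] = set()
    results: List[Row] = []
    for index, (keyword, location) in enumerate(jobs):
        if index > 0:
            # Randomized 2-5 s gap between queries, so traffic is not machine-regular.
            sleep(random.uniform(min_delay, max_delay))
        for row in fetch(keyword, location):
            key = dedup_key(row)
            if key in seen:
                continue  # already captured by an earlier, overlapping search
            seen.add(key)
            results.append(row)
    return results
```

Injecting `sleep` keeps the loop testable (pass a no-op in tests). In a real scraper the pause would also apply between the individual page requests inside `fetch`, not only between queries.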

Step 4: The Canary (Preview)

- Test: Never start a full batch at once. Run a canary test: scrape one keyword in one city for one minute.

- Check results: Are phone numbers formatted correctly? Do URLs include the protocol (https://)?

- Run production: If the preview data matches your Excel columns, you are ready for production. The scraper iterates over your keyword/location combinations and collects the results in memory. When it finishes, save the data.

- Format: Choose .xlsx (Excel) to work with the data immediately, or .csv (Comma-Separated Values) if you plan to process it further later.
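A canary run is easy to check programmatically. The sketch below flags preview rows that are missing schema columns, have phone numbers not in the (555) 123-4567 form, or have URLs without a protocol, and then writes rows to .csv using only the standard library. The function names `canary_problems` and `export_csv` are hypothetical; writing .xlsx instead would need a third-party library such as openpyxl.

```python
import csv
import re
from typing import Any, Dict, List

# Target phone format from Phase 4, e.g. "(555) 123-4567".
PHONE_FORMAT = re.compile(r"^\(\d{3}\) \d{3}-\d{4}$")


def canary_problems(rows: List[Dict[str, Any]], columns: List[str]) -> List[str]:
    """Return human-readable problems found in a preview sample (empty list = OK)."""
    problems: List[str] = []
    for i, row in enumerate(rows):
        missing = [c for c in columns if c not in row]
        if missing:
            problems.append(f"row {i}: missing columns {missing}")
        phone = row.get("phone")
        if phone and not PHONE_FORMAT.match(phone):
            problems.append(f"row {i}: unexpected phone format {phone!r}")
        url = row.get("website")
        if url and not url.startswith(("https://", "http://")):
            problems.append(f"row {i}: website lacks a protocol {url!r}")
    return problems


def export_csv(rows: List[Dict[str, Any]], columns: List[str], path: str) -> None:
    """Write rows in schema column order; missing values become empty cells."""
    # utf-8-sig adds a byte-order mark, which helps Excel detect UTF-8 text.
    with open(path, "w", newline="", encoding="utf-8-sig") as handle:
        writer = csv.DictWriter(handle, fieldnames=columns, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(rows)
```

Run `canary_problems` on the one-minute sample; only when it returns an empty list is it worth launching the full batch and calling `export_csv`.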

Phase 4: Data Hygiene and Analysis

Once the data is exported, the real work with it begins.

- Normalization: Put all phone numbers in a consistent format (e.g., (555) 123-4567). Web URLs must be clean (no trailing slashes).

- Deduplication: Use Excel's Data > Remove Duplicates as a final safeguard.

- Enrichment: Add a column called Status to track your workflow (Ready, Contacted, Converted).

- Segmentation: Use PivotTables to analyze the density of results.
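The same hygiene steps can also be applied in a script before the file reaches Excel. This sketch assumes US/Canada (NANP) phone numbers, treats name plus normalized phone as the duplicate key, defaults Status to Ready, and uses a simple count per column value as a stand-in for a PivotTable. All function names (`normalize_phone`, `normalize_url`, `clean`, `count_by`) are hypothetical.

```python
import re
from collections import Counter
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


def normalize_phone(raw: Optional[str]) -> Optional[str]:
    """Format US/Canada numbers as (555) 123-4567; return anything else unchanged."""
    if not raw:
        return raw
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the +1 country code
    if len(digits) != 10:
        return raw  # not a 10-digit NANP number; leave it for manual review
    return f"({digits[:3]}) {digits[3:6]}-{digits[6:]}"


def normalize_url(raw: Optional[str]) -> Optional[str]:
    """Trim whitespace and trailing slashes, e.g. 'https://example.com/' -> 'https://example.com'."""
    if not raw:
        return raw
    return raw.strip().rstrip("/")


def clean(rows: List[Row]) -> List[Row]:
    """Normalize, deduplicate on (name, phone), and default Status to 'Ready'."""
    seen = set()
    cleaned: List[Row] = []
    for original in rows:
        row = dict(original)  # leave the caller's data untouched
        row["phone"] = normalize_phone(row.get("phone"))
        row["website"] = normalize_url(row.get("website"))
        key = ((row.get("business_name") or "").strip().lower(), row["phone"])
        if key in seen:
            continue
        seen.add(key)
        row.setdefault("status", "Ready")  # workflow: Ready, Contacted, Converted
        cleaned.append(row)
    return cleaned


def count_by(rows: List[Row], column: str) -> Counter:
    """PivotTable-style count of rows per value, e.g. businesses per city."""
    return Counter(row.get(column) for row in rows)
```

Because deduplication runs after normalization, "555-123-4567" and "(555) 123-4567" collapse into one row; Excel's Remove Duplicates then remains the final safety net.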

Phase 5: Compliance and Architecture

When scraping, you are dealing with public infrastructure.

- Public Data: Work only with publicly available business attributes (NAP: Name, Address, Phone). This is information that businesses want to be found.

- GMB Awareness: Know your source. These listings come from Google Business Profile (formerly Google My Business, hence GMB). To understand how this data structure works, consult the guide What is a GMB scraper?

- Legal Posture: Always follow best practices for request rates and data privacy. For a closer look at the legal background, see Is scraping Google Maps legal?

Summary

Scraping Google Maps is about more than retrieving data; it transforms the web into a well-organized, searchable database. With schema planning, a tool such as the Google Maps Scraper, and simple data hygiene rules, you can build a sustainable market intelligence pipeline.

For a broader, platform-independent perspective on this approach, you can also watch this tutorial on How to Scrape Google Maps.
